Hate speech, online harassment and trolling are a major problem for social media users. According to a 2019 report from The Alan Turing Institute, 30-40% of people in the UK have witnessed online abuse and 10-20% have been personally targeted.

Methods to reduce online abuse using artificial intelligence (AI) have largely focused on tools for automatic detection. Meta reports that 96% of the hate speech it took action on in Q1 2022 was found before users reported it, with its automated (AI) classification systems playing a key role in identification and removal.

But is simply removing abuse or hate speech the best way of dealing with it? Might it be better to look at an approach which educates, changes beliefs and builds resistance online?

What is counterspeech?

Counterspeech is a direct response to hateful or harmful speech. It can take the form of text, graphics or video, and it offers benefits over moderation and removal, including supporting victims, protecting free speech and signalling to other social media users that the content is unacceptable. However, creating counterspeech requires significant expertise and time, and given the volume of hate speech and online abuse currently affecting users, many targeted communities lack the resources to respond effectively.

Does counterspeech work?

A 2021 study found that empathy-based counterspeech could encourage Twitter users to delete their own racist posts. In the study, researchers randomly assigned 1,350 English-speaking Twitter users who had sent messages containing racist hate speech to one of three counterspeech strategies or to a control group:

- empathy,

- warning of consequences,

- humour,

- a control group.

In the empathy approach, the counterspeech message was designed to elicit empathy, e.g. “For African Americans, it really hurts to see people use language like this”. In the warning of consequences approach, the message was designed to warn the sender about the potential social consequences of their tweet, e.g. “Hey, remember that your friends and family can see this tweet too”. In the humour-based approach, memes were used with captions such as “It’s time to stop tweeting” or “Please stop tweeting.”

The study found that the empathy-based counterspeech messages both increased the deletion of existing hate speech and reduced the future creation of more hate speech over a four-week follow-up period. (There were no consistent effects for the strategies using humour or warning of consequences.)

A new project aims to use AI to generate counterspeech

To examine whether AI could be used to generate counterspeech to fight abuse and hate, the Turing Institute’s Online Safety Team has launched a new project that uses AI to automatically detect problematic posts and generate counterspeech in response. Their approach will use AI models to identify what existing counterspeech looks like and then automatically generate new examples from scratch, which would make it much easier to address online hate and abuse at scale. Their aim is to raise awareness of the benefits of having more content on social media platforms (i.e. through counterspeech) rather than less (i.e. through content moderation and removal).

The project is ongoing, so it will be a while before there are results showing whether this approach is effective, but it looks set to be an interesting case study of how AI could be used for public good to deal with the growing torrent of online abuse.

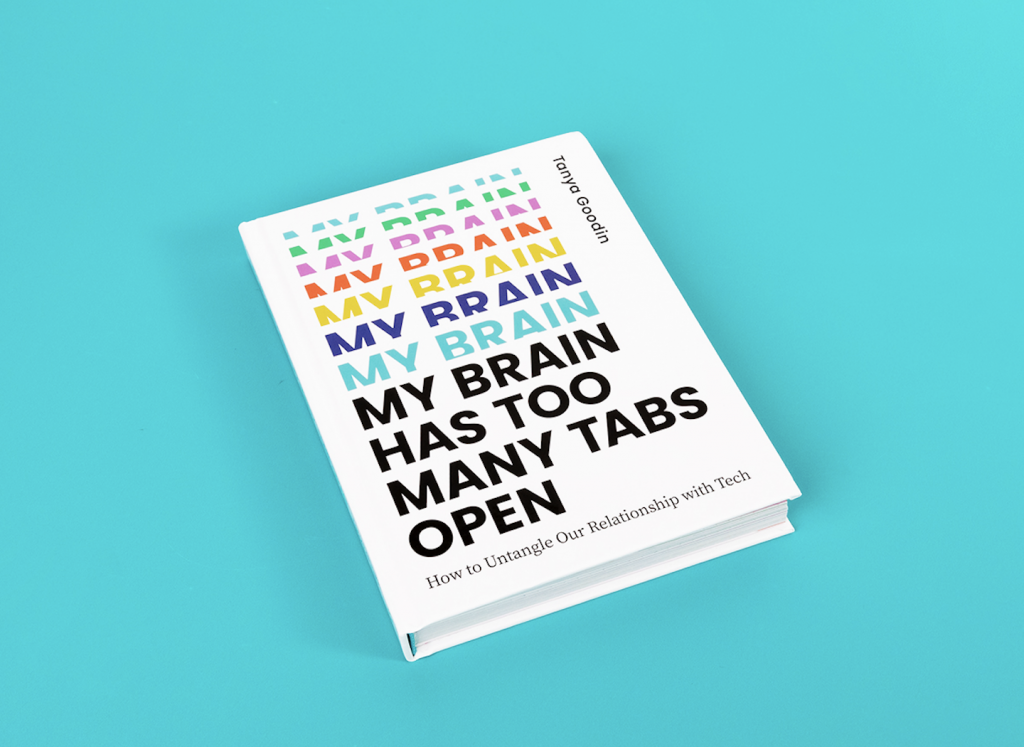

For more about how to deal with online abuse, hate speech and trolling, pick up a copy of my new book.