Imagine being on a Zoom call where the person on the other end is getting real-time messages from an artificial intelligence (AI) that’s analysing your face and indicating when you’re bored, annoyed, or even lying. A creepy prospect, but one that sounds slightly far-fetched?

Well, no. This ‘emotion AI’ is something Zoom is considering building into its product right now, with research currently underway on how to do that.

So-called emotion AI works by using computer vision, facial recognition, speech recognition, natural-language processing and other artificial intelligence technologies to capture cues that represent people’s external expressions in an effort to match them to internal emotions, attitudes or feelings.

Emotion detection in sales

Being able to ‘read’ body language and decode someone’s unspoken feelings from their face has been part of a skilled salesperson’s bag of tricks for centuries. But sales and customer service software companies such as Uniphore and Sybill are now building products that go much further and use AI to help humans understand and respond to human emotion.

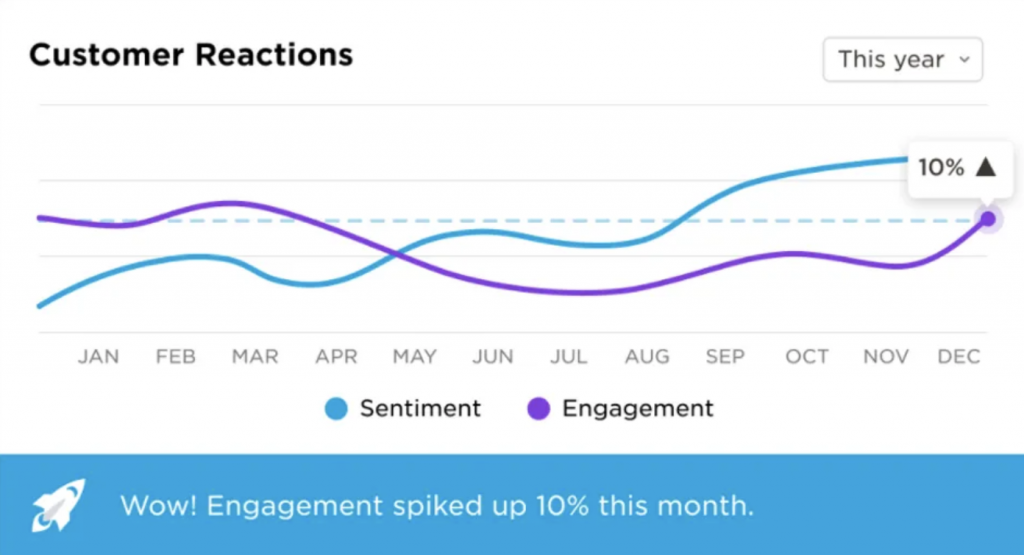

Uniphore sells software called Q for Sales that tries to detect whether a potential customer is interested in what a salesperson has to say during a video call, alerting the salesperson in real time during the meeting if someone seems more or less engaged in a particular topic. The software picks up on the behavioural cues associated with someone’s tone of voice, eye and facial movements or other non-verbal body language, then analyses that data to assess their ‘emotional attitude’ and translates it into a digital scorecard.
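To make the idea of a ‘digital scorecard’ concrete, here is a toy sketch of how cue readings might be rolled up into a per-topic engagement score. It is not Uniphore’s method: the cue names, the weights and the 0 to 100 scale are all assumptions made up for illustration, and real products are far more complex.

```python
from dataclasses import dataclass

# Hypothetical weights for each cue, invented for this illustration only.
WEIGHTS = {"voice_tone": 0.4, "eye_contact": 0.3, "facial_movement": 0.3}


@dataclass
class CueReading:
    """One set of cue values for a topic, each expected in the range 0..1."""
    voice_tone: float
    eye_contact: float
    facial_movement: float


def engagement_score(reading: CueReading) -> float:
    """Weighted average of the cues, clamped to 0..1 and scaled to 0..100."""
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = min(1.0, max(0.0, getattr(reading, name)))
        total += weight * value
    return round(total * 100, 1)


def scorecard(readings: dict[str, CueReading]) -> dict[str, float]:
    """Build a per-topic scorecard from the cue readings."""
    return {topic: engagement_score(r) for topic, r in readings.items()}


if __name__ == "__main__":
    call = {
        "pricing": CueReading(voice_tone=0.2, eye_contact=0.3, facial_movement=0.1),
        "demo": CueReading(voice_tone=0.9, eye_contact=0.8, facial_movement=0.7),
    }
    for topic, score in scorecard(call).items():
        print(f"{topic}: {score}")
```

Note what the sketch cannot do: it scores outward signals only. Someone who smiles and holds eye contact while thinking about something else entirely would still score as highly engaged.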

Human rights and privacy concerns

In a sales environment, and as long as both parties are aware of what’s going on, AI used in this way may seem relatively benign. But incorporating emotion AI in such a widely used tool as Zoom has generated a huge backlash. More than 25 human and digital rights organisations including the American Civil Liberties Union, Electronic Privacy Information Center and Fight for the Future have sent a letter to Zoom demanding the company end their plans to incorporate these emotion AI features into their software.

“This software is discriminatory, manipulative, potentially dangerous and based on assumptions that all people use the same facial expressions, voice patterns, and body language,” wrote the groups in the letter sent on Wednesday to Eric Yuan, founder and CEO of Zoom.

‘Emotion AI’ is on shaky ground

The whole premise behind emotion AI – that a human’s interior emotional state can actually be deduced from their face and expressions – is much disputed.

“Zoom’s use of this software gives credence to the pseudoscience of emotion analysis which experts agree does not work. Facial expressions can vary significantly and are often disconnected from the emotions underneath such that even humans are often not able to accurately decipher them,” the human and digital rights organisations’ letter to Zoom continued.

Kate Crawford, an AI ethics scholar, research professor at USC Annenberg and a senior principal researcher at Microsoft Research, agrees. She cites a 2019 research paper that stated, “The available scientific evidence suggests that people do sometimes smile when happy, frown when sad, scowl when angry, and so on, as proposed by the common view – more than what would be expected by chance. Yet how people communicate anger, disgust, fear, happiness, sadness, and surprise varies substantially across cultures, situations, and even across people within a single situation.”

“The claim that a person’s interior state can be accurately assessed by analysing that person’s face is premised on shaky evidence.”

Kate Crawford, author of ‘Atlas of AI’

Even the vice-president at Uniphore admits the limitations of the technology. “There is no real objective way to measure people’s emotions,” he said. “You could be smiling and nodding, and in fact, you’re thinking about your vacation next week.”

Emotion AI has also consistently come under fire for the serious ethical concerns it raises. In 2019, the AI Now Institute called for a ban on the use of emotion AI in significant decisions such as hiring and when judging student performance. In 2021, the Brookings Institution called for it to be banned for use by law enforcement.

So, should we worry?

AI-based features for assessing people’s emotional states are already showing up in remote classroom platforms like Intel’s ‘Class’ and will be mandatory in new EU vehicles from 2022 to detect driver distraction, signs of drunkenness and road rage. Like it or not, these technologies are coming – many of them are here already.

Ethical guidelines mostly require vendors of this type of software to tell you if you are being monitored or analysed in any way (the ‘camera on’ and ‘recording’ indicators in Zoom, for example). But, much like concerns over digital and social media, legislation largely lags behind technology across the globe. Exercising caution and asking how, and why, any screen-based software is assessing your face is increasingly a wise move.

For more about dealing with phone addiction, digital distractions and getting a better balance with your phone – pick up a copy of my new book.