A new study has found that robots (or rather, AI) are better than humans at composing those strangely seductive emails promising a no-strings-attached transfer from a Nigerian bank account.

Before the study, it had been thought unlikely that scammers would have the expertise needed to craft the kind of targeted scam emails that generate high click-through rates. Because click-through rates are, fortunately, typically very low, a successful scam needs huge volumes of emails.

But something has changed to make things easier for scammers: widespread access to ‘AI-as-a-service’.

AI-as-a-service

For a long time, artificial intelligence (AI) was cost-prohibitive for most companies, let alone scammers, for several reasons:

- The computers needed were huge and expensive.

- AI developers were in demand and commanded high salaries.

- Many companies didn’t have access to datasets large enough to train AI models.

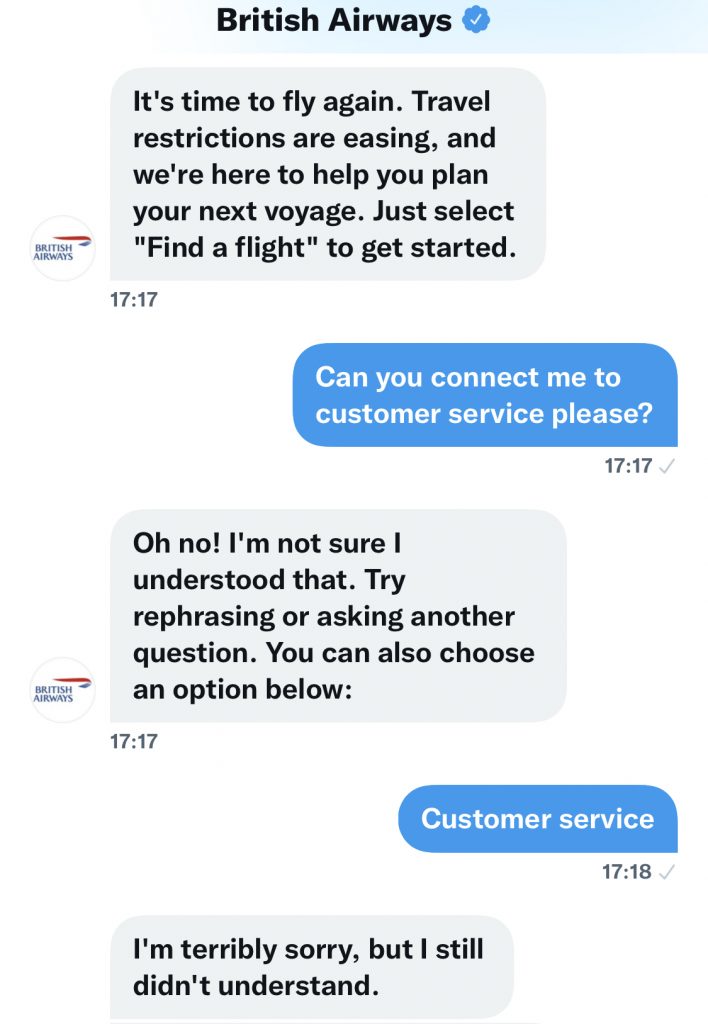

Now, however, there are thousands of AI-as-a-service applications that companies can buy off the shelf without needing to develop AI skills themselves. Customer service chatbots, now used by many customer-facing businesses, are a good example.

How easy is it for scammers to use AI for crime?

Developing AI for use in scams previously required the same expertise and deep pockets that businesses needed, which presented a significant barrier to entry for scammers.

“It takes millions of dollars to train a really good model, but once you put it on AI-as-a-service it costs a couple of cents and it’s really easy to use.”

Eugene Lim, GovTech Singapore cybersecurity specialist

In the recent phishing study, a team from Singapore’s Government Technology Agency designed an experiment in which they sent phishing emails generated by an AI-as-a-service platform to 200 of their colleagues, alongside phishing emails they had crafted themselves. The links in both types of message were harmless: rather than leading to a scam, they simply logged click-throughs. To the researchers’ surprise, more people clicked the links in the AI-generated messages than in the human-written ones, and by a significant margin.

The Singaporean researchers used OpenAI’s deep learning language model GPT-3, combined with AI-as-a-service products focused on personality analysis, to produce their phishing emails. Personality-analysis tools use machine learning to predict a person’s traits and dispositions from data about their behaviour. Combining the two allowed the researchers to tailor each phishing email precisely to a colleague’s background and traits. They said the AI-generated emails sounded “weirdly human”.

Should we be worried by scammers having access to AI?

In a word, ‘yes’. Access to AI turbo-charges the scale at which scammers can operate and, as the study suggests, could also make their scams more likely to succeed. Cybercrime activity rose sharply during the pandemic, and law enforcement agencies around the world are woefully under-prepared to deal with it. Of course, whenever a new field or technology appears, criminals soon follow, looking to exploit it. But AI-as-a-service does seem to have made their job easier.

My latest book, My Brain Has Too Many Tabs Open, is all about the ways in which technology is changing our behaviour, and what we can do about it.